Posted

Testing code is hard, and no matter how hard you test it there is no test like shipping it to production. The amount of use, edge cases and stress found in production is beyond what you can imagine in your automated tests. So let’s talk about the best ways to test code in production.

Feature flags are an industry-standard approach to managing the rollout of new features. You add the new code behind a feature flag then can turn on and off the flag to switch between the new and old versions.

if feature_enabled("do new thing"):

new_code()

else:

old_code()

The idea is simple, but feature flags have some major problems.

Implementing the flag is usually manual. This means that it is easy to accidentally modify the existing behaviour when adding the new code.

Let’s take an example function:

def get_user(cookie):

decoded = jwt.decode(cookie, key=SIGNING_KEY)

user = User.get(decoded.data.user_id)

if decoded.meta.issued < user.tokens_revoked:

clear_login_cookie()

return None

return user

And add a new feature behind a feature-flag:

def get_user(cookie):

decoded = jwt.decode(cookie, key=SIGNING_KEY)

if feature_enabled("revocation cache"):

tokens_revoked = cache_get(decoded.data.user_id)

else:

user = User.get(decoded.data.user_id)

tokens_revoked = maybe_user.tokens_revoked

if decoded.meta.issued < tokens_revoked:

clear_login_cookie()

return None

return User.get(decoded.data.user_id)

Did you see the bug? The new version fetches User from the database twice for a valid token. If this code runs for every request it could easily overload the database. The author wanted to share the cookie-clearing code and was thinking about what the code would look like after the flag was removed. But they failed to thoroughly consider the performance of the old path. Other common errors include failing to initialize variables on some paths or forgetting to properly clear a variable between uses on one path.

Often it is best to just duplicate all affected code. It is pretty simple in this self-contained example but can be a huge mess when the change affects multiple functions. This is also a problem for review as it can be hard to tell what “new” lines are actually new vs the lines that are just copies of the previous code with slightly different interfaces or other changes.

Often a feature flag will implicitly depend on another feature flag being enabled (or disabled). This can result in issues if you try to roll back the “base” flag not knowing about the dependant flag.

Having multiple implementations of some features makes it difficult to run tests. If you test with the feature flag disabled you are shipping untested code to production but if you test with the flag enabled you aren’t testing the fallback.

You might want to test both versions but doing this manually is tedious and error-prone. But testing each configuration becomes expensive if you have 100 feature flags (especially if some of them interact).

Feature flags are 90% testing in prod. You use a feature flag to sneak the code past the tests then slowly ramp it up in prod while squinting at your monitoring carefully.

What if we just used regular releases to test features?

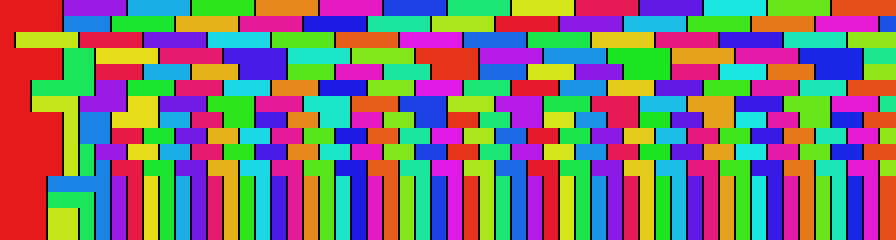

Instead of releasing once a week with feature-flags in place we just release once a day (or once an hour). However instead of a quick 0% → 10% → 100% rollout we extend the lifetime. For example imagine that every day we release a new version to a fraction of traffic and remove the oldest version.

This is a simple linear rollout scheme, but other schemes can start slower and accelerate once confidence is built.

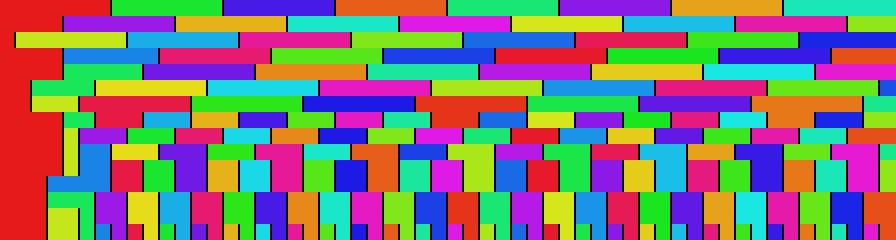

Or you can combine exponential with a holdback so that you have at least 7 days of history running. This can help identify when a problem was introduced.

Version skew tolerance is effectively mandatory. Since your change will always be running next to older code for a while it needs to be compatible otherwise it will be rejected.

People often try to skirt this issue which is fundamental to distributed systems (which any website is) and it can go largely unnoticed until a major incident occurs and rollbacks only cause more failures. Running a spread of versions at the same time thoroughly tests this scenario so that it can be caught and resolved before disaster strikes.

Unlike feature-flagging every change is automatically tested with a slow rollout. There is no need to add noise to code reviews with conditionals, flag names and duplicating code. Even large type-level refactors that touch most of the codebase can be tested.

Time-to-production is an important metric for developer productivity. Even 1% of production traffic exposure is enough to start collecting some basic metrics, logs and other information to find out how a feature is working. With support for routing select users to specific versions they can fully test their new code or even enable it for a customer which is having issues.

By limiting the traffic the risk of production deploy is reduced, so shipping quick is acceptable.

Because each version only contains one implementation of each feature you can simply run integration tests and get good coverage.

The flip side is that because rollouts are slow old code will run for longer. This means that cleaning up old data, database schemas or otherwise will have to wait until the last version using them is completely obsolete (not running and not valuable as a possible rollback target).

Almost all of your monitoring needs to support easy breakdown by version. This is because when you start seeing an error you will want to see what versions are affected. If the problem only appears in some versions you can resolve the issue by rolling everything to a working version.

There are also upsides to the more complex monitoring. Incident response times can be significantly improved because it is easier to identify the last good version. Especially if you have a holdback you can just see which of the holdback versions have the issue. This makes it quickly identify if a rollback will help and which version need to be rolled back to. This also makes it quicker to identify the actual commit that caused the issues as you only need to look at the changes in a small release instead of a large release.